Some brief notes

Usage

1. The following property is added to the Kafka producer and consumer so that they can reach the Schema Registry server.

configProps.put("schema.registry.url", "http://127.0.0.1:8081");

2. Avro schema files with the .avsc extension are defined. The version number is specified in the avsc file.

3. The Avro Maven Plugin is configured in the project. This plugin generates Java code from the Avro files.
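As a hedged illustration of step 1, the sketch below collects the producer and consumer settings in plain `Properties` objects. The class name `SchemaRegistryClientConfig`, the broker address, and the group id are assumptions made for illustration. The serializer class names and the `schema.registry.url` and `specific.avro.reader` keys belong to Confluent's kafka-avro-serializer library, which has to be on the classpath once these properties are handed to a real `KafkaProducer` or `KafkaConsumer`.

```java
import java.util.Properties;

public class SchemaRegistryClientConfig {

    // Assumed local endpoints; adjust them to your environment.
    static final String BOOTSTRAP_SERVERS = "127.0.0.1:9092";
    static final String SCHEMA_REGISTRY_URL = "http://127.0.0.1:8081";

    // Settings for a producer that writes Avro values.
    static Properties producerProps() {
        Properties props = new Properties();
        props.put("bootstrap.servers", BOOTSTRAP_SERVERS);
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "io.confluent.kafka.serializers.KafkaAvroSerializer");
        props.put("schema.registry.url", SCHEMA_REGISTRY_URL);
        return props;
    }

    // Settings for a consumer that reads Avro values.
    static Properties consumerProps(String groupId) {
        Properties props = new Properties();
        props.put("bootstrap.servers", BOOTSTRAP_SERVERS);
        props.put("group.id", groupId);
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "io.confluent.kafka.serializers.KafkaAvroDeserializer");
        props.put("schema.registry.url", SCHEMA_REGISTRY_URL);
        // Ask for the classes generated by the Avro Maven Plugin instead of GenericRecord.
        props.put("specific.avro.reader", "true");
        return props;
    }

    public static void main(String[] args) {
        System.out.println(producerProps());
        System.out.println(consumerProps("example-group"));
    }
}
```

Keeping the registry URL in one constant makes it harder for the producer and consumer to drift apart and point at different registries.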

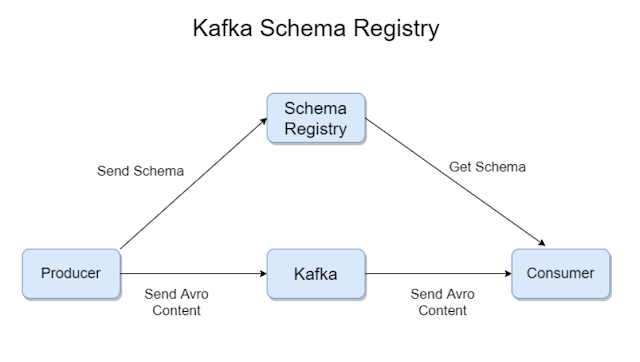

Schema Registry Data Flow

Applications that use Confluent’s Avro serialisation library for Kafka also have to use Confluent’s schema registry. It is a separate process that provides a REST API to store and retrieve schemas and to check for different kinds of compatibility. The schema registry persists the schemas into a special Kafka topic called ‘_schemas’, which contains one Avro schema file per message type and version.

How does a Kafka consumer know which schema to retrieve from the registry when receiving a message? Confluent’s documentation specifies a wire format, which includes an ID that can be used to retrieve the Avro schema file from the schema registry’s REST API.

When using Confluent’s schema registry, the following steps have to be taken in order to write and later read an event from a Kafka topic in Avro binary encoded format:

1. The publisher (event writer) has to call the schema registry’s REST API to upload an Avro schema file (once per schema version)
2. The schema registry checks for compatibility and returns an ID on success, or an error if, for example, the schema is not compatible with earlier versions
3. The publisher encodes the data according to Confluent’s wire format (including schema ID and Avro binary encoding of the data)
4. The consumer reads the raw data from the Kafka topic
5. The consumer calls the schema registry’s REST API to download the Avro schema file with the ID provided in the first few bytes of the data
6. The consumer decodes the message using the downloaded schema

Confluent’s kafka-avro-serializer library implements this behavior.
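Steps 1 and 5 of the flow above are plain REST calls, so they can be sketched with the JDK's own HTTP client types. This is a minimal sketch rather than what kafka-avro-serializer does internally: the class name `SchemaRegistryRequests`, the registry address, and the hand-rolled JSON escaping are assumptions for illustration. The endpoints `POST /subjects/{subject}/versions` and `GET /schemas/ids/{id}` and the `application/vnd.schemaregistry.v1+json` media type are part of the Schema Registry REST API.

```java
import java.net.URI;
import java.net.http.HttpRequest;

public class SchemaRegistryRequests {

    // Assumed registry address; adjust it to your environment.
    static final String REGISTRY_URL = "http://127.0.0.1:8081";
    static final String MEDIA_TYPE = "application/vnd.schemaregistry.v1+json";

    // Minimal JSON string escaping, enough for a typical Avro schema file.
    static String jsonString(String raw) {
        return "\"" + raw.replace("\\", "\\\\")
                         .replace("\"", "\\\"")
                         .replace("\n", "\\n") + "\"";
    }

    // The registry expects the schema wrapped as a string: {"schema": "<escaped schema>"}.
    static String registerBody(String avroSchemaJson) {
        return "{\"schema\": " + jsonString(avroSchemaJson) + "}";
    }

    // Step 1: upload a schema under a subject; the response carries the schema ID.
    static HttpRequest registerRequest(String subject, String avroSchemaJson) {
        return HttpRequest.newBuilder(URI.create(REGISTRY_URL + "/subjects/" + subject + "/versions"))
                .header("Content-Type", MEDIA_TYPE)
                .POST(HttpRequest.BodyPublishers.ofString(registerBody(avroSchemaJson)))
                .build();
    }

    // Step 5: download the schema that belongs to an ID read from a message.
    static HttpRequest fetchByIdRequest(int schemaId) {
        return HttpRequest.newBuilder(URI.create(REGISTRY_URL + "/schemas/ids/" + schemaId))
                .header("Accept", MEDIA_TYPE)
                .GET()
                .build();
    }

    public static void main(String[] args) {
        // Building the requests needs no network access. Sending them requires a running registry:
        // HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())
        System.out.println(registerRequest("customer-topic-value", "{\"type\":\"string\"}"));
        System.out.println(fetchByIdRequest(1));
    }
}
```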

Schematically, the data flow looks like this

Schema ID

The explanation is as follows

One way the size of the data sent to Kafka is reduced is this: when the producer first tries to send an event, it sends the event's schema to Schema Registry, which returns a four-byte schema ID. The producer then sends the data along with the schema ID to Kafka. The consumer extracts the schema ID, gets the corresponding schema from the registry, and performs validation. Because the full schema does not travel with every message, the overall content size of the data is reduced considerably.

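The schema ID occupies 4 bytes at the very start of the Kafka message: in Confluent's wire format, byte 0 is a magic byte and bytes 1 to 4 hold the schema ID. Schematically, it looks like this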
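The byte layout can be sketched with a `ByteBuffer`. This is an illustrative sketch of the framing only, not the code of kafka-avro-serializer; the class name `ConfluentWireFormat` and its helper methods are hypothetical, and the two payload bytes stand in for real Avro binary data.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

public class ConfluentWireFormat {

    static final byte MAGIC_BYTE = 0x0;
    // One magic byte followed by a 4-byte schema ID.
    static final int HEADER_SIZE = 1 + Integer.BYTES;

    // Producer side: prepend the header to the Avro-encoded payload.
    static byte[] frame(int schemaId, byte[] avroPayload) {
        return ByteBuffer.allocate(HEADER_SIZE + avroPayload.length)
                .put(MAGIC_BYTE)
                .putInt(schemaId) // ByteBuffer writes big-endian by default
                .put(avroPayload)
                .array();
    }

    // Consumer side: read the schema ID from bytes 1 to 4.
    static int schemaId(byte[] message) {
        ByteBuffer buffer = ByteBuffer.wrap(message);
        if (message.length < HEADER_SIZE || buffer.get() != MAGIC_BYTE) {
            throw new IllegalArgumentException("Message is not in Confluent wire format");
        }
        return buffer.getInt();
    }

    // Consumer side: everything after the header is the Avro binary data.
    static byte[] payload(byte[] message) {
        schemaId(message); // validates the header first
        return Arrays.copyOfRange(message, HEADER_SIZE, message.length);
    }

    public static void main(String[] args) {
        byte[] fakeAvro = {0x02, 0x41}; // placeholder bytes, not a real record
        byte[] message = frame(42, fakeAvro);
        System.out.println(schemaId(message));       // prints 42
        System.out.println(payload(message).length); // prints 2
    }
}
```

The header adds 5 bytes per message regardless of how large the schema itself is.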

The explanation is as follows

The biggest drawback of this system is, in my opinion, that both producer and consumer depend not only on Kafka itself but also on the schema registry being available at runtime.

If the consumer tries to read an Avro encoded message from a Kafka topic but cannot reach the schema registry’s REST API to download the required version of the schema, an exception is thrown and, in the worst case, the message gets discarded.

This is true even if the consumer or producer application has a local copy of the current schema file available. A common misconception at willhaben was that, if the consumer has a local copy of the current Avro schema, it uses this local schema file to decode the message. This is not supported by Confluent’s kafka-avro-serializer library at this time. The local Avro schema file is only used to generate Java classes for a schema (as described before). These classes can be used to handle the consumed data, but are not used for serialisation or deserialisation.

You should also be aware that the schema registry depends on the _schemas Kafka topic to persist its data. If this topic gets lost somehow, there is no way to restore the IDs generated when publishing schema files to the registry’s REST API, and therefore the messages on the Kafka topics referencing these schemas cannot be decoded.

Unit Test

A library named Component Tests, which makes this easier, is available here

Querying Schemas

We do it like this

curl -X GET -H "Content-Type: application/json" http://localhost:8081/subjects/

If we have a topic named "customer-topic", we get the following output

["customer-topic-key","customer-topic-value"]

If we want to see the schema of the value, we do this

curl -X GET -H "Content-Type: application/json" http://localhost:8081/subjects/customer-topic-value/versions/1

SerDe

Some terminology is as follows

With respect to the schema-registry, there are a few terms.

1. subject: refers to the topic name in Kafka (default strategy; for more info, look for subject naming strategies).
2. id: a unique id is allocated to each schema stored in the registry.
3. version: each schema can have multiple versions associated with it per subject.
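Under the default strategy (TopicNameStrategy), the subject is the topic name plus a `-key` or `-value` suffix, which is why "customer-topic" appears as two subjects in the query output earlier. A minimal sketch of that naming rule follows; the class `SubjectNames` and the method `subjectFor` are hypothetical helpers, not part of any Confluent library.

```java
public class SubjectNames {

    // Mirrors the default naming rule: "<topic>-key" for keys, "<topic>-value" for values.
    static String subjectFor(String topic, boolean isKey) {
        return topic + (isKey ? "-key" : "-value");
    }

    public static void main(String[] args) {
        System.out.println(subjectFor("customer-topic", true));  // prints customer-topic-key
        System.out.println(subjectFor("customer-topic", false)); // prints customer-topic-value
    }
}
```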
